
SMOTE for Imbalanced Classification with Python

Last Updated on March 17, 2021

Imbalanced classification involves developing predictive models on classification datasets that have a severe class imbalance.

The challenge of working with imbalanced datasets is that most machine learning techniques will ignore, and in turn have poor performance on, the minority class, although typically it is performance on the minority class that is most important.

One approach to addressing imbalanced datasets is to oversample the minority class. The simplest approach involves duplicating examples in the minority class, although these examples don't add any new information to the model. Instead, new examples can be synthesized from the existing examples. This is a type of data augmentation for the minority class and is referred to as the Synthetic Minority Oversampling Technique, or SMOTE for short.

In this tutorial, you will discover the SMOTE for oversampling imbalanced classification datasets.

After completing this tutorial, you will know:

- How the SMOTE synthesizes new examples for the minority class.

- How to correctly fit and evaluate machine learning models on SMOTE-transformed training datasets.

- How to use extensions of the SMOTE that generate synthetic examples along the class decision boundary.

Kick-start your project with my new book Imbalanced Classification with Python, including step-by-step tutorials and the Python source code files for all examples.

Let's get started.

- Updated January/2021: Updated links for API documentation.


Tutorial Overview

This tutorial is divided into 5 parts; they are:

- Synthetic Minority Oversampling Technique

- Imbalanced-Learn Library

- SMOTE for Balancing Data

- SMOTE for Classification

- SMOTE With Selective Synthetic Sample Generation

  - Borderline-SMOTE

  - Borderline-SMOTE SVM

  - Adaptive Synthetic Sampling (ADASYN)

Synthetic Minority Oversampling Technique

A problem with imbalanced classification is that there are too few examples of the minority class for a model to effectively learn the decision boundary.

One way to solve this problem is to oversample the examples in the minority class. This can be achieved by simply duplicating examples from the minority class in the training dataset prior to fitting a model. This can balance the class distribution but does not provide any additional information to the model.
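As a rough illustration of duplication-based oversampling, the sketch below balances a toy binary dataset by repeating randomly chosen minority rows. The function name `duplicate_minority` and the toy data are assumptions made for this example; it is not the imbalanced-learn implementation.

```python
# Minimal sketch: balance a binary dataset by duplicating minority rows.
import numpy as np

def duplicate_minority(X, y, minority_label, seed=0):
    """Return X, y with minority rows repeated until both classes have equal counts."""
    rng = np.random.default_rng(seed)
    minority_idx = np.flatnonzero(y == minority_label)
    majority_count = int(np.sum(y != minority_label))
    n_extra = majority_count - minority_idx.size
    # sample existing minority rows with replacement; no new values are created
    extra_idx = rng.choice(minority_idx, size=n_extra, replace=True)
    X_bal = np.vstack([X, X[extra_idx]])
    y_bal = np.concatenate([y, y[extra_idx]])
    return X_bal, y_bal

# toy data: 8 majority rows and 2 minority rows
X_toy = np.arange(20, dtype=float).reshape(10, 2)
y_toy = np.array([0] * 8 + [1] * 2)
X_dup, y_dup = duplicate_minority(X_toy, y_toy, minority_label=1)
print(np.bincount(y_dup))                          # class counts after duplication
print(len(np.unique(X_dup[y_dup == 1], axis=0)))   # distinct minority rows
```

The class counts end up equal, yet the number of distinct minority rows is unchanged, which is the sense in which duplication adds no new information.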

An improvement on duplicating examples from the minority class is to synthesize new examples from the minority class. This is a type of data augmentation for tabular data and can be very effective.

Perhaps the most widely used approach to synthesizing new examples is called the Synthetic Minority Oversampling TEchnique, or SMOTE for short. This technique was described by Nitesh Chawla, et al. in their 2002 paper named for the technique titled "SMOTE: Synthetic Minority Over-sampling Technique."

SMOTE works by selecting examples that are close in the feature space, drawing a line between the examples in the feature space and drawing a new sample at a point along that line.

Specifically, a random example from the minority class is first chosen. Then k of the nearest neighbors for that example are found (typically k=5). A randomly selected neighbor is chosen and a synthetic example is created at a randomly selected point between the two examples in feature space.

… SMOTE first selects a minority class instance a at random and finds its k nearest minority class neighbors. The synthetic instance is then created by choosing one of the k nearest neighbors b at random and connecting a and b to form a line segment in the feature space. The synthetic instances are generated as a convex combination of the two chosen instances a and b.

— Page 47, Imbalanced Learning: Foundations, Algorithms, and Applications, 2013.
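The procedure described above can be sketched in a few lines of NumPy. This is a simplified illustration under stated assumptions (Euclidean distance, brute-force neighbor search, and a hypothetical function name `smote_sketch`), not the code used by imbalanced-learn.

```python
# Simplified SMOTE sketch: interpolate between a minority example and one of its neighbors.
import numpy as np

def smote_sketch(X_min, n_new, k=5, seed=0):
    """Create n_new synthetic rows from the minority-class rows in X_min."""
    rng = np.random.default_rng(seed)
    n = X_min.shape[0]
    k = min(k, n - 1)  # cannot have more neighbors than other examples
    # pairwise Euclidean distances between minority examples
    dists = np.linalg.norm(X_min[:, None, :] - X_min[None, :, :], axis=2)
    np.fill_diagonal(dists, np.inf)  # an example is not its own neighbor
    neighbors = np.argsort(dists, axis=1)[:, :k]
    synthetic = np.empty((n_new, X_min.shape[1]))
    for i in range(n_new):
        a = rng.integers(n)                # random minority example a
        b = neighbors[a, rng.integers(k)]  # random one of its k nearest neighbors b
        lam = rng.random()                 # position along the segment, in [0, 1)
        # convex combination of a and b: a + lam * (b - a)
        synthetic[i] = X_min[a] + lam * (X_min[b] - X_min[a])
    return synthetic

X_min = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0],
                  [1.0, 1.0], [0.5, 0.5], [0.2, 0.8]])
X_new = smote_sketch(X_min, n_new=20, k=3)
print(X_new.shape)
```

Because every synthetic row is a convex combination of two existing minority rows, it always falls inside the region already spanned by the minority class.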

This procedure can be used to create as many synthetic examples for the minority class as are required. The paper suggests first using random undersampling to trim the number of examples in the majority class, then using SMOTE to oversample the minority class to balance the class distribution.

The combination of SMOTE and under-sampling performs better than plain under-sampling.

— SMOTE: Synthetic Minority Over-sampling Technique, 2011.

The approach is effective because new synthetic examples from the minority class are created that are plausible, that is, are relatively close in feature space to existing examples from the minority class.

Our method of synthetic over-sampling works to cause the classifier to build larger decision regions that contain nearby minority class points.

— SMOTE: Synthetic Minority Over-sampling Technique, 2011.

A general downside of the approach is that synthetic examples are created without considering the majority class, possibly resulting in ambiguous examples if there is a strong overlap for the classes.

Now that we are familiar with the technique, let's look at a worked example for an imbalanced classification problem.

Imbalanced-Learn Library

In these examples, we will use the implementations provided by the imbalanced-learn Python library, which can be installed via pip as follows:

```shell
sudo pip install imbalanced-learn
```

You can confirm that the installation was successful by printing the version of the installed library:

```python
# check version number
import imblearn
print(imblearn.__version__)
```

Running the example will print the version number of the installed library.


SMOTE for Balancing Data

In this section, we will develop an intuition for the SMOTE by applying it to an imbalanced binary classification problem.

First, we can use the make_classification() scikit-learn function to create a synthetic binary classification dataset with 10,000 examples and a 1:100 class distribution.

```python
...
# define dataset
X, y = make_classification(n_samples=10000, n_features=2, n_redundant=0,
    n_clusters_per_class=1, weights=[0.99], flip_y=0, random_state=1)
```

We can use the Counter object to summarize the number of examples in each class to confirm the dataset was created correctly.

```python
...
# summarize class distribution
counter = Counter(y)
print(counter)
```

Finally, we can create a scatter plot of the dataset and color the examples for each class a different color to clearly see the spatial nature of the class imbalance.

```python
...
# scatter plot of examples by class label
for label, _ in counter.items():
    row_ix = where(y == label)[0]
    pyplot.scatter(X[row_ix, 0], X[row_ix, 1], label=str(label))
pyplot.legend()
pyplot.show()
```

Tying this all together, the complete example of generating and plotting a synthetic binary classification problem is listed below.

```python
# Generate and plot a synthetic imbalanced classification dataset
from collections import Counter
from sklearn.datasets import make_classification
from matplotlib import pyplot
from numpy import where
# define dataset
X, y = make_classification(n_samples=10000, n_features=2, n_redundant=0,
    n_clusters_per_class=1, weights=[0.99], flip_y=0, random_state=1)
# summarize class distribution
counter = Counter(y)
print(counter)
# scatter plot of examples by class label
for label, _ in counter.items():
    row_ix = where(y == label)[0]
    pyplot.scatter(X[row_ix, 0], X[row_ix, 1], label=str(label))
pyplot.legend()
pyplot.show()
```

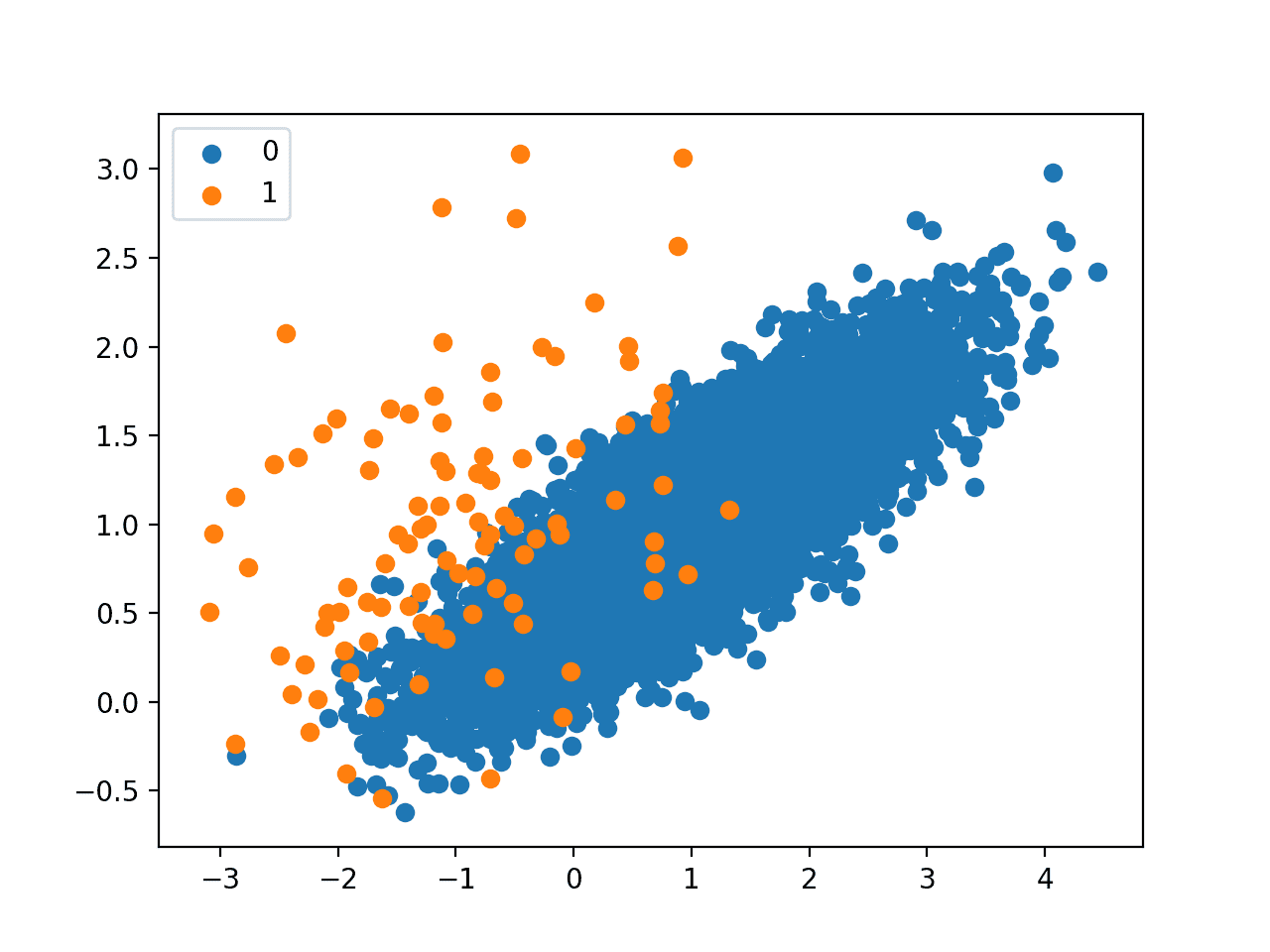

Running the example first summarizes the form distribution, confirms the 1:100 ratio, in this case with virtually 9,900 examples in the majority class and 100 in the minority class.

| Counter({0: 9900, ane: 100}) |

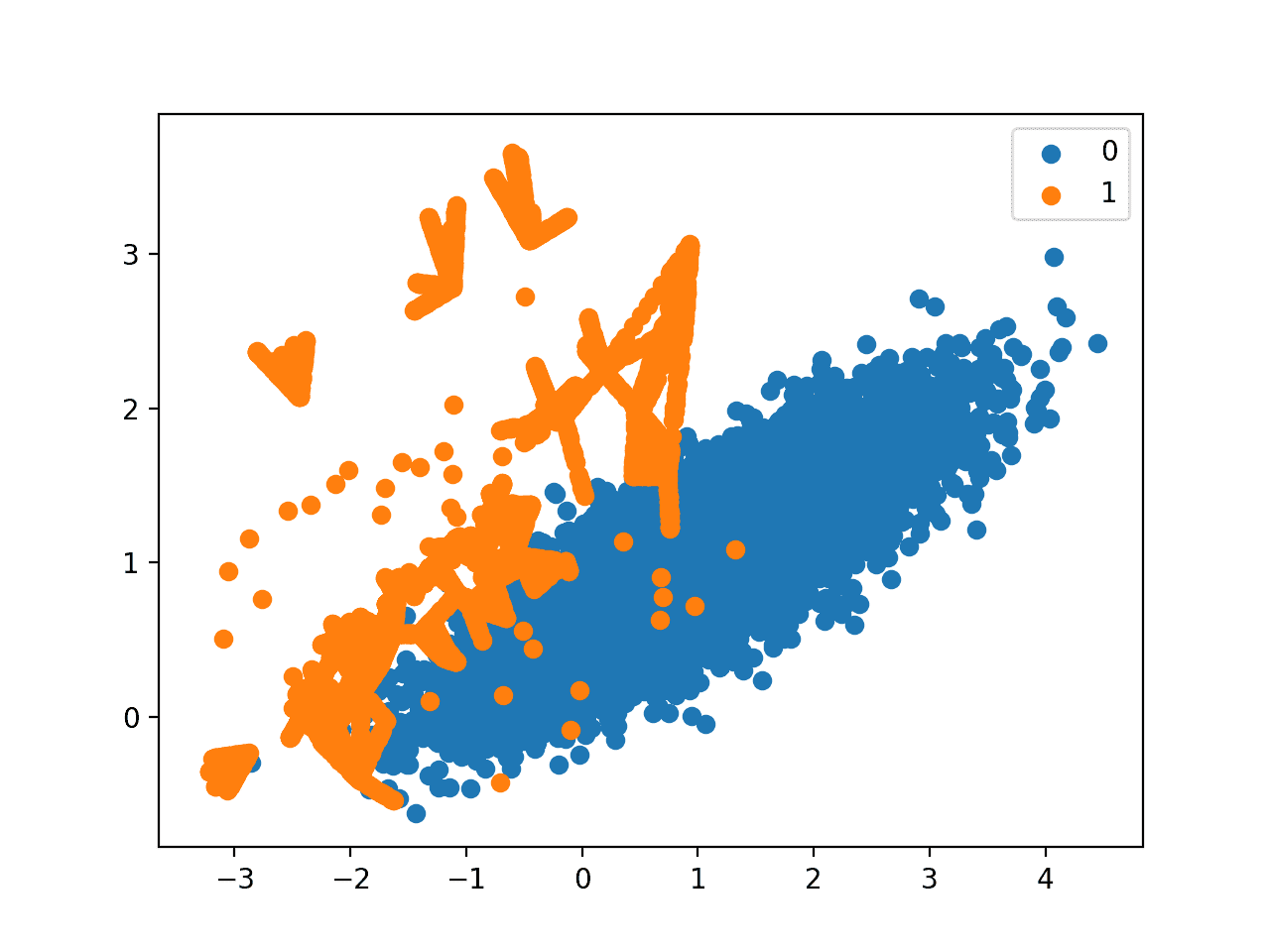

A scatter plot of the dataset is created showing the large mass of points that vest to the majority class (bluish) and a small-scale number of points spread out for the minority class (orange). Nosotros can see some measure of overlap between the two classes.

Scatter Plot of Imbalanced Binary Classification Problem

Next, we can oversample the minority class using SMOTE and plot the transformed dataset.

We can use the SMOTE implementation provided by the imbalanced-learn Python library in the SMOTE class.

The SMOTE class acts like a data transform object from scikit-learn in that it must be defined and configured, fit on a dataset, and then applied to create a new transformed version of the dataset.

For example, we can define a SMOTE instance with default parameters that will balance the minority class, and then fit and apply it in one step to create a transformed version of our dataset.

```python
...
# transform the dataset
oversample = SMOTE()
X, y = oversample.fit_resample(X, y)
```

Once transformed, we can summarize the class distribution of the new transformed dataset, which we would expect to now be balanced through the creation of many new synthetic examples in the minority class.

```python
...
# summarize the new class distribution
counter = Counter(y)
print(counter)
```

A scatter plot of the transformed dataset can also be created, and we would expect to see many more examples for the minority class on lines between the original examples in the minority class.

Tying this together, the complete example of applying SMOTE to the synthetic dataset and then summarizing and plotting the transformed result is listed below.

```python
# Oversample and plot imbalanced dataset with SMOTE
from collections import Counter
from sklearn.datasets import make_classification
from imblearn.over_sampling import SMOTE
from matplotlib import pyplot
from numpy import where
# define dataset
X, y = make_classification(n_samples=10000, n_features=2, n_redundant=0,
    n_clusters_per_class=1, weights=[0.99], flip_y=0, random_state=1)
# summarize class distribution
counter = Counter(y)
print(counter)
# transform the dataset
oversample = SMOTE()
X, y = oversample.fit_resample(X, y)
# summarize the new class distribution
counter = Counter(y)
print(counter)
# scatter plot of examples by class label
for label, _ in counter.items():
    row_ix = where(y == label)[0]
    pyplot.scatter(X[row_ix, 0], X[row_ix, 1], label=str(label))
pyplot.legend()
pyplot.show()
```

Running the example first creates the dataset and summarizes the class distribution, showing the 1:100 ratio.

Then the dataset is transformed using the SMOTE and the new class distribution is summarized, showing a balanced distribution now with 9,900 examples in the minority class.

```
Counter({0: 9900, 1: 100})
Counter({0: 9900, 1: 9900})
```

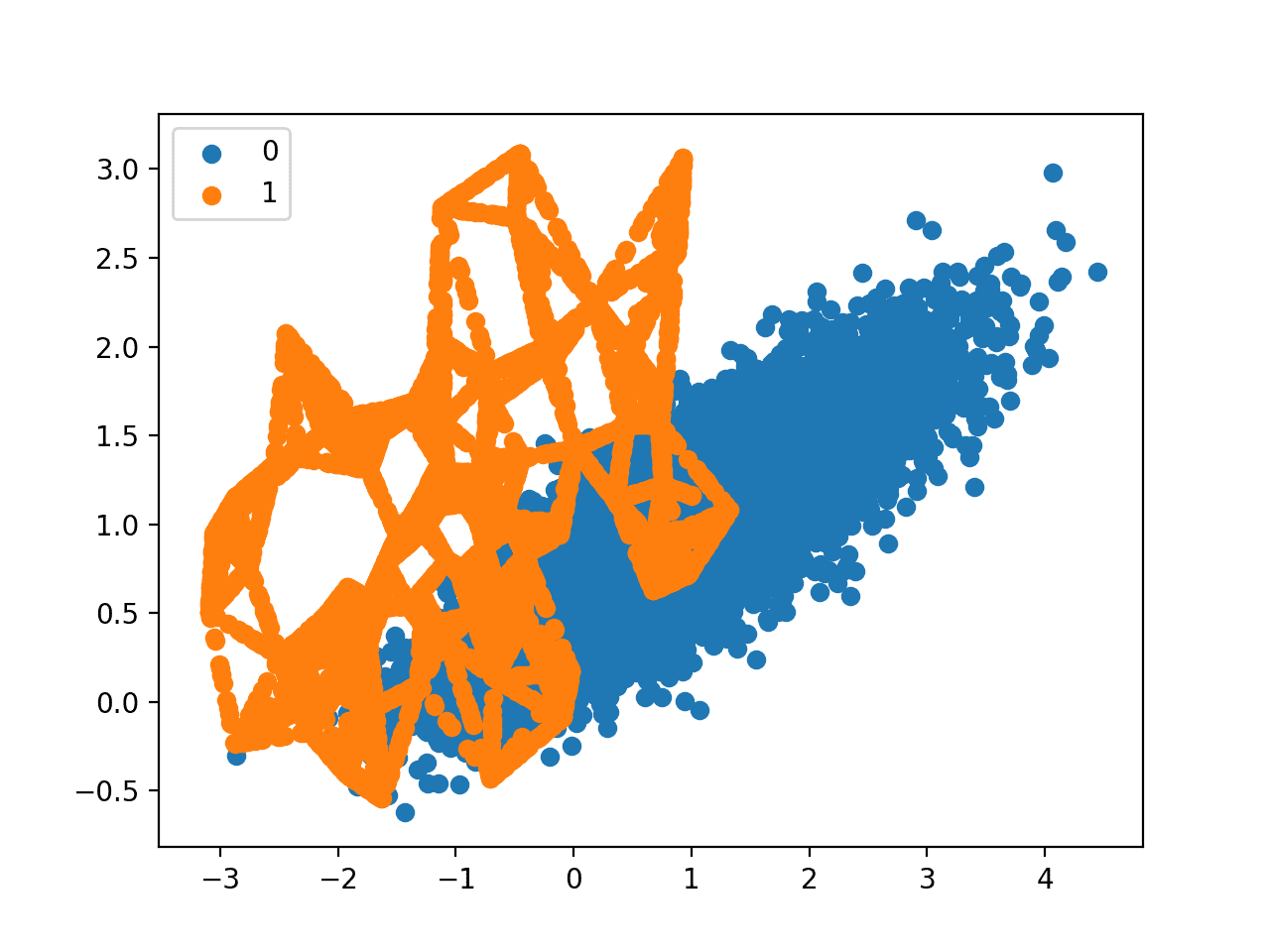

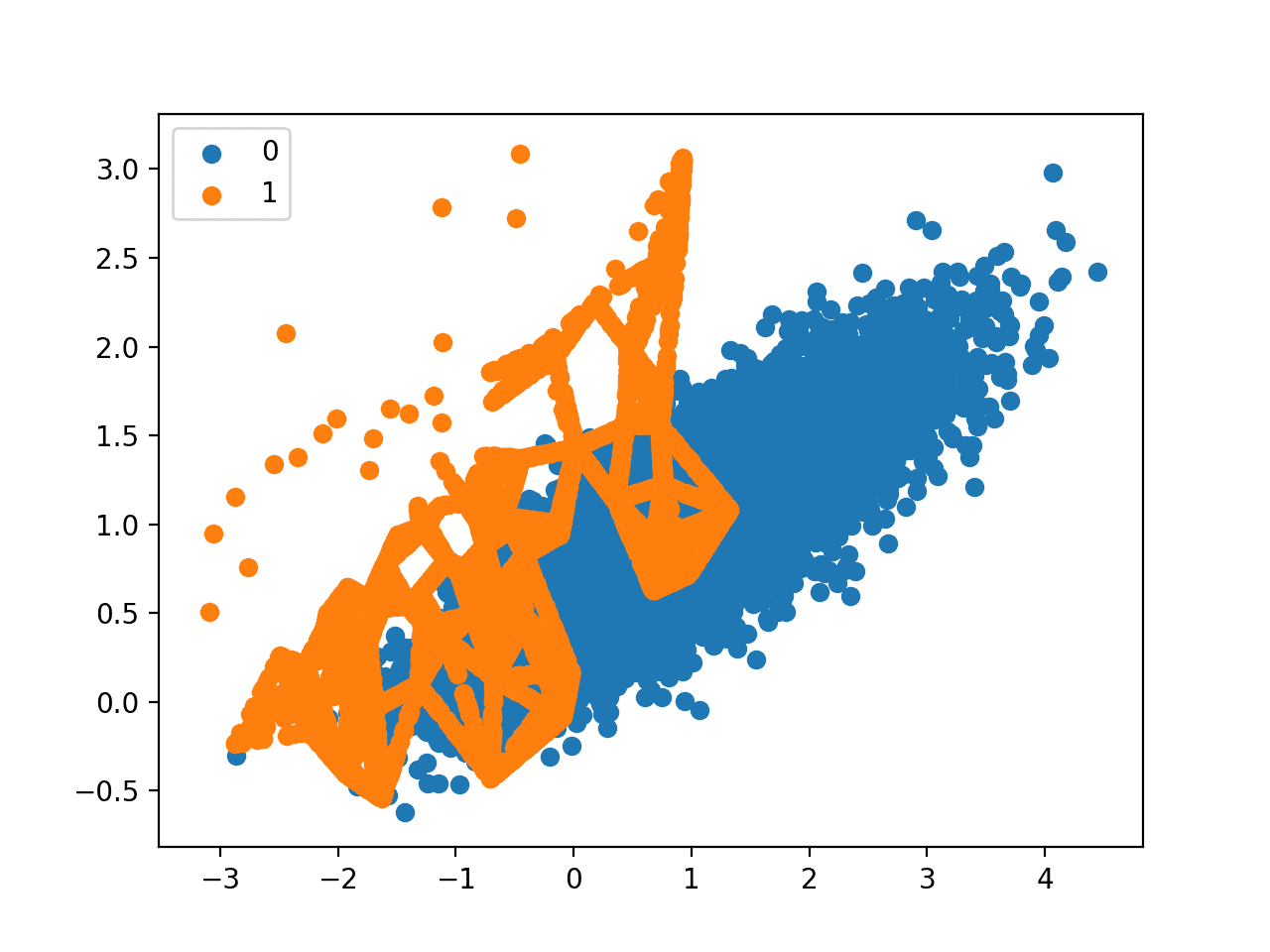

Finally, a scatter plot of the transformed dataset is created.

It shows many more examples in the minority class created along the lines between the original examples in the minority class.

Scatter Plot of Imbalanced Binary Classification Problem Transformed by SMOTE

The original paper on SMOTE suggested combining SMOTE with random undersampling of the majority class.

The imbalanced-learn library supports random undersampling via the RandomUnderSampler class.

We can update the example to first oversample the minority class to have 10 percent the number of examples of the majority class (e.g. about 1,000), then use random undersampling to reduce the number of examples in the majority class until the minority class is 50 percent of its size (e.g. about 2,000 majority examples).

To implement this, we can specify the desired ratios as arguments to the SMOTE and RandomUnderSampler classes; for example:

```python
...
over = SMOTE(sampling_strategy=0.1)
under = RandomUnderSampler(sampling_strategy=0.5)
```
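To make the two ratios concrete, the following sketch works through the arithmetic for the tutorial's 9,900 to 100 dataset. The helper `expected_counts` is hypothetical and only mirrors the idea that a float `sampling_strategy` is the desired minority-to-majority ratio after resampling; it does not call imbalanced-learn.

```python
# Back-of-the-envelope class counts for oversampling followed by undersampling.
def expected_counts(n_majority, n_minority, over_ratio, under_ratio):
    """Return (majority, minority) counts after the two resampling steps."""
    # oversample: the minority grows to over_ratio * majority (it never shrinks)
    n_minority_after = max(n_minority, int(over_ratio * n_majority))
    # undersample: the majority shrinks until minority / majority == under_ratio
    n_majority_after = min(n_majority, int(n_minority_after / under_ratio))
    return n_majority_after, n_minority_after

print(expected_counts(9900, 100, over_ratio=0.1, under_ratio=0.5))  # (1980, 990)
```

With these settings the minority class grows from 100 to 990 examples and the majority class is cut from 9,900 to 1,980, a 1:2 ratio.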

We can then chain these two transforms together into a Pipeline.

The Pipeline can then be applied to a dataset, performing each transformation in turn and returning a final dataset with the accumulation of the transforms applied to it, in this case oversampling followed by undersampling.

```python
...
steps = [('o', over), ('u', under)]
pipeline = Pipeline(steps=steps)
```

The pipeline can then be fit and applied to our dataset just like a single transform:

```python
...
# transform the dataset
X, y = pipeline.fit_resample(X, y)
```

We can then summarize and plot the resulting dataset.

We would expect some SMOTE oversampling of the minority class, although not as much as before where the dataset was balanced. We also expect fewer examples in the majority class via random undersampling.

Tying this all together, the complete example is listed below.

```python
# Oversample with SMOTE and random undersample for imbalanced dataset
from collections import Counter
from sklearn.datasets import make_classification
from imblearn.over_sampling import SMOTE
from imblearn.under_sampling import RandomUnderSampler
from imblearn.pipeline import Pipeline
from matplotlib import pyplot
from numpy import where
# define dataset
X, y = make_classification(n_samples=10000, n_features=2, n_redundant=0,
    n_clusters_per_class=1, weights=[0.99], flip_y=0, random_state=1)
# summarize class distribution
counter = Counter(y)
print(counter)
# define pipeline
over = SMOTE(sampling_strategy=0.1)
under = RandomUnderSampler(sampling_strategy=0.5)
steps = [('o', over), ('u', under)]
pipeline = Pipeline(steps=steps)
# transform the dataset
X, y = pipeline.fit_resample(X, y)
# summarize the new class distribution
counter = Counter(y)
print(counter)
# scatter plot of examples by class label
for label, _ in counter.items():
    row_ix = where(y == label)[0]
    pyplot.scatter(X[row_ix, 0], X[row_ix, 1], label=str(label))
pyplot.legend()
pyplot.show()
```

Running the example first creates the dataset and summarizes the class distribution.

Next, the dataset is transformed, first by oversampling the minority class, then undersampling the majority class. The final class distribution after this sequence of transforms matches our expectations with a 1:2 ratio or about 2,000 examples in the majority class and about 1,000 examples in the minority class.

```
Counter({0: 9900, 1: 100})
Counter({0: 1980, 1: 990})
```

Finally, a scatter plot of the transformed dataset is created, showing the oversampled minority class and the undersampled majority class.

Scatter Plot of Imbalanced Dataset Transformed by SMOTE and Random Undersampling

Now that we are familiar with transforming imbalanced datasets, let's look at using SMOTE when fitting and evaluating classification models.

SMOTE for Classification

In this section, we will look at how we can use SMOTE as a data preparation method when fitting and evaluating machine learning algorithms in scikit-learn.

First, we use our binary classification dataset from the previous section, then fit and evaluate a decision tree algorithm.

The algorithm is defined with any required hyperparameters (we will use the defaults), and then we will use repeated stratified k-fold cross-validation to evaluate the model. We will use three repeats of 10-fold cross-validation, meaning that 10-fold cross-validation is applied three times, fitting and evaluating 30 models on the dataset.

The dataset is stratified, meaning that each fold of the cross-validation split will have the same class distribution as the original dataset, in this case, a 1:100 ratio. We will evaluate the model using the ROC area under curve (AUC) metric. This can be optimistic for severely imbalanced datasets but will still show a relative change with better performing models.
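As a quick check of the cross-validation setup described above, the sketch below counts the splits and the minority examples in each test fold. It assumes scikit-learn and NumPy are installed; the label vector and placeholder features are made up to match the tutorial's 9,900 to 100 class counts.

```python
# Inspect the folds produced by repeated stratified k-fold cross-validation.
import numpy as np
from sklearn.model_selection import RepeatedStratifiedKFold

# labels only: 9,900 majority and 100 minority examples
y_demo = np.array([0] * 9900 + [1] * 100)
X_demo = np.zeros((y_demo.size, 1))  # placeholder features; only labels drive the split

cv_demo = RepeatedStratifiedKFold(n_splits=10, n_repeats=3, random_state=1)
minority_per_fold = [int(y_demo[test_ix].sum())
                     for _, test_ix in cv_demo.split(X_demo, y_demo)]

print(len(minority_per_fold))  # number of train/test splits, one model per split
print(set(minority_per_fold))  # minority examples found in each test fold
```

Each of the 30 test folds holds 1,000 examples, 10 of which belong to the minority class, so every model is scored on the same 1:100 ratio as the full dataset.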

```python
...
# define model
model = DecisionTreeClassifier()
# evaluate pipeline
cv = RepeatedStratifiedKFold(n_splits=10, n_repeats=3, random_state=1)
scores = cross_val_score(model, X, y, scoring='roc_auc', cv=cv, n_jobs=-1)
```

Once fit, we can calculate and report the mean of the scores across the folds and repeats.

```python
...
print('Mean ROC AUC: %.3f' % mean(scores))
```

We would not expect a decision tree fit on the raw imbalanced dataset to perform very well.

Tying this together, the complete example is listed below.

```python
# decision tree evaluated on imbalanced dataset
from numpy import mean
from sklearn.datasets import make_classification
from sklearn.model_selection import cross_val_score
from sklearn.model_selection import RepeatedStratifiedKFold
from sklearn.tree import DecisionTreeClassifier
# define dataset
X, y = make_classification(n_samples=10000, n_features=2, n_redundant=0,
    n_clusters_per_class=1, weights=[0.99], flip_y=0, random_state=1)
# define model
model = DecisionTreeClassifier()
# evaluate pipeline
cv = RepeatedStratifiedKFold(n_splits=10, n_repeats=3, random_state=1)
scores = cross_val_score(model, X, y, scoring='roc_auc', cv=cv, n_jobs=-1)
print('Mean ROC AUC: %.3f' % mean(scores))
```

Running the example evaluates the model and reports the mean ROC AUC.

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and compare the average outcome.

In this case, we can see that a ROC AUC of about 0.76 is reported.

Now, we can try the same model and the same evaluation method, although use a SMOTE transformed version of the dataset.

The correct application of oversampling during k-fold cross-validation is to apply the method to the training dataset only, then evaluate the model on the stratified but non-transformed test set.
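To show what "training dataset only" means in practice, here is a hand-rolled sketch of a single split. Both helper functions are hypothetical, and the resampler simply repeats minority rows as a stand-in for SMOTE so that the example depends on NumPy alone.

```python
# Resample the training rows only; the held-out rows keep their original distribution.
import numpy as np

def split_then_oversample(X, y, test_ix, resample):
    """Hold out test_ix untouched and apply `resample` to the remaining rows only."""
    mask = np.zeros(len(y), dtype=bool)
    mask[test_ix] = True
    X_train, y_train = resample(X[~mask], y[~mask])
    return X_train, y_train, X[mask], y[mask]

def duplicate_to_balance(X, y):
    """Stand-in resampler: repeat minority rows until the two classes match."""
    counts = np.bincount(y)
    minority = int(np.argmin(counts))
    idx = np.flatnonzero(y == minority)
    extra = np.resize(idx, counts.max() - counts.min())  # cycle through minority rows
    return np.vstack([X, X[extra]]), np.concatenate([y, y[extra]])

X_cv = np.arange(40, dtype=float).reshape(20, 2)
y_cv = np.array([0] * 16 + [1] * 4)
held_out = np.array([0, 1, 2, 3, 16])  # 4 majority rows and 1 minority row
X_tr, y_tr, X_te, y_te = split_then_oversample(X_cv, y_cv, held_out, duplicate_to_balance)
print(np.bincount(y_tr), np.bincount(y_te))
```

The training portion becomes balanced while the held-out portion keeps its original class counts, so the score reflects the distribution the model would face in use. The sketch spells out by hand the train-only resampling that the correct procedure requires.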

This can be achieved by defining a Pipeline that first transforms the training dataset with SMOTE and then fits the model.

```python
...
# define pipeline
steps = [('over', SMOTE()), ('model', DecisionTreeClassifier())]
pipeline = Pipeline(steps=steps)
```

This pipeline can then be evaluated using repeated k-fold cross-validation.

Tying this together, the complete example of evaluating a decision tree with SMOTE oversampling on the training dataset is listed below.

```python
# decision tree evaluated on imbalanced dataset with SMOTE oversampling
from numpy import mean
from sklearn.datasets import make_classification
from sklearn.model_selection import cross_val_score
from sklearn.model_selection import RepeatedStratifiedKFold
from sklearn.tree import DecisionTreeClassifier
from imblearn.pipeline import Pipeline
from imblearn.over_sampling import SMOTE
# define dataset
X, y = make_classification(n_samples=10000, n_features=2, n_redundant=0,
    n_clusters_per_class=1, weights=[0.99], flip_y=0, random_state=1)
# define pipeline
steps = [('over', SMOTE()), ('model', DecisionTreeClassifier())]
pipeline = Pipeline(steps=steps)
# evaluate pipeline
cv = RepeatedStratifiedKFold(n_splits=10, n_repeats=3, random_state=1)
scores = cross_val_score(pipeline, X, y, scoring='roc_auc', cv=cv, n_jobs=-1)
print('Mean ROC AUC: %.3f' % mean(scores))
```

Running the example evaluates the model and reports the mean ROC AUC score across the multiple folds and repeats.

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and compare the average outcome.

In this case, we can see a modest improvement in performance from a ROC AUC of about 0.76 to about 0.80.

As mentioned in the paper, it is believed that SMOTE performs better when combined with undersampling of the majority class, such as random undersampling.

We can achieve this by simply adding a RandomUnderSampler step to the Pipeline.

As in the previous section, we will first oversample the minority class with SMOTE to about a 1:10 ratio, then undersample the majority class to achieve about a 1:2 ratio.

```python
...
# define pipeline
model = DecisionTreeClassifier()
over = SMOTE(sampling_strategy=0.1)
under = RandomUnderSampler(sampling_strategy=0.5)
steps = [('over', over), ('under', under), ('model', model)]
pipeline = Pipeline(steps=steps)
```

Tying this together, the complete example is listed below.

```python
# decision tree on imbalanced dataset with SMOTE oversampling and random undersampling
from numpy import mean
from sklearn.datasets import make_classification
from sklearn.model_selection import cross_val_score
from sklearn.model_selection import RepeatedStratifiedKFold
from sklearn.tree import DecisionTreeClassifier
from imblearn.pipeline import Pipeline
from imblearn.over_sampling import SMOTE
from imblearn.under_sampling import RandomUnderSampler
# define dataset
X, y = make_classification(n_samples=10000, n_features=2, n_redundant=0,
    n_clusters_per_class=1, weights=[0.99], flip_y=0, random_state=1)
# define pipeline
model = DecisionTreeClassifier()
over = SMOTE(sampling_strategy=0.1)
under = RandomUnderSampler(sampling_strategy=0.5)
steps = [('over', over), ('under', under), ('model', model)]
pipeline = Pipeline(steps=steps)
# evaluate pipeline
cv = RepeatedStratifiedKFold(n_splits=10, n_repeats=3, random_state=1)
scores = cross_val_score(pipeline, X, y, scoring='roc_auc', cv=cv, n_jobs=-1)
print('Mean ROC AUC: %.3f' % mean(scores))
```

Running the example evaluates the model with the pipeline of SMOTE oversampling and random undersampling on the training dataset.

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and compare the average outcome.

In this case, we can see that the reported ROC AUC shows an additional lift to about 0.83.

You could explore testing different ratios of the minority class and majority class (e.g. changing the sampling_strategy argument) to see if a further lift in performance is possible.

Another area to explore would be to test different values of the k-nearest neighbors selected in the SMOTE procedure when each new synthetic example is created. The default is k=5, although larger or smaller values will influence the types of examples created, and in turn, may impact the performance of the model.

For example, we could grid search a range of values of k, such as values from 1 to 7, and evaluate the pipeline for each value.

```python
...
# values to evaluate
k_values = [1, 2, 3, 4, 5, 6, 7]
for k in k_values:
    # define pipeline
    ...
```

The complete example is listed below.

```python
# grid search k value for SMOTE oversampling for imbalanced classification
from numpy import mean
from sklearn.datasets import make_classification
from sklearn.model_selection import cross_val_score
from sklearn.model_selection import RepeatedStratifiedKFold
from sklearn.tree import DecisionTreeClassifier
from imblearn.pipeline import Pipeline
from imblearn.over_sampling import SMOTE
from imblearn.under_sampling import RandomUnderSampler
# define dataset
X, y = make_classification(n_samples=10000, n_features=2, n_redundant=0,
    n_clusters_per_class=1, weights=[0.99], flip_y=0, random_state=1)
# values to evaluate
k_values = [1, 2, 3, 4, 5, 6, 7]
for k in k_values:
    # define pipeline
    model = DecisionTreeClassifier()
    over = SMOTE(sampling_strategy=0.1, k_neighbors=k)
    under = RandomUnderSampler(sampling_strategy=0.5)
    steps = [('over', over), ('under', under), ('model', model)]
    pipeline = Pipeline(steps=steps)
    # evaluate pipeline
    cv = RepeatedStratifiedKFold(n_splits=10, n_repeats=3, random_state=1)
    scores = cross_val_score(pipeline, X, y, scoring='roc_auc', cv=cv, n_jobs=-1)
    score = mean(scores)
    print('> k=%d, Mean ROC AUC: %.3f' % (k, score))
```

Running the example will perform SMOTE oversampling with different k values for the KNN used in the procedure, followed by random undersampling and fitting a decision tree on the resulting training dataset.

The mean ROC AUC is reported for each configuration.

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and compare the average outcome.

In this case, the results suggest that a k=3 might be good with a ROC AUC of about 0.84, and k=7 might also be good with a ROC AUC of about 0.85.

This highlights that both the amount of oversampling and undersampling performed (sampling_strategy argument) and the number of examples selected from which a partner is chosen to create a synthetic example (k_neighbors) may be important parameters to select and tune for your dataset.

```
> k=1, Mean ROC AUC: 0.827
> k=2, Mean ROC AUC: 0.823
> k=3, Mean ROC AUC: 0.834
> k=4, Mean ROC AUC: 0.840
> k=5, Mean ROC AUC: 0.839
> k=6, Mean ROC AUC: 0.839
> k=7, Mean ROC AUC: 0.853
```

Now that we are familiar with how to use SMOTE when fitting and evaluating classification models, let's look at some extensions of the SMOTE procedure.

SMOTE With Selective Synthetic Sample Generation

We can be selective about the examples in the minority class that are oversampled using SMOTE.

In this section, we will review some extensions to SMOTE that are more selective regarding the examples from the minority class that provide the basis for generating new synthetic examples.

Borderline-SMOTE

A popular extension to SMOTE involves selecting those instances of the minority class that are misclassified, such as with a k-nearest neighbor classification model.

We can then oversample only those difficult instances, providing more resolution only where it may be required.

The examples on the borderline and the ones nearby […] are more apt to be misclassified than the ones far from the borderline, and thus more important for classification.

— Borderline-SMOTE: A New Over-Sampling Method in Imbalanced Data Sets Learning, 2005.

These examples that are misclassified are likely ambiguous and in a region of the edge or border of the decision boundary where class membership may overlap. As such, this modification to SMOTE is called Borderline-SMOTE and was proposed by Hui Han, et al. in their 2005 paper titled "Borderline-SMOTE: A New Over-Sampling Method in Imbalanced Data Sets Learning."

The authors describe two versions of the method. Borderline-SMOTE1 generates synthetic examples only between borderline minority instances and their minority class neighbors. Borderline-SMOTE2 additionally generates synthetic examples between each borderline minority instance and its nearest majority class neighbor.

Borderline-SMOTE2 not only generates synthetic examples from each example in DANGER and its positive nearest neighbors in P, but also does that from its nearest negative neighbor in N.

— Borderline-SMOTE: A New Over-Sampling Method in Imbalanced Data Sets Learning, 2005.
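The idea of a DANGER set can be sketched as follows. This is an illustrative reading of the borderline rule, assuming a minority example counts as borderline when at least half, but not all, of its m nearest neighbors belong to the majority class; the function name `danger_indices` and the toy data are assumptions, not library code.

```python
# Sketch: find borderline ("DANGER") minority examples with a nearest-neighbor count.
import numpy as np

def danger_indices(X, y, minority_label=1, m=5):
    """Return indices of minority rows whose m nearest neighbors are mostly majority."""
    minority_idx = np.flatnonzero(y == minority_label)
    flags = np.zeros(minority_idx.size, dtype=bool)
    for j, i in enumerate(minority_idx):
        dists = np.linalg.norm(X - X[i], axis=1)
        dists[i] = np.inf  # skip the example itself
        nearest = np.argsort(dists)[:m]
        n_majority = int(np.sum(y[nearest] != minority_label))
        # all m neighbors in the majority is treated as noise, fewer than half as safe
        flags[j] = (m / 2 <= n_majority < m)
    return minority_idx[flags]

# toy data on a line: a majority block, a minority block, and one stray minority point
xs = np.array([0, 1, 2, 3, 4, 5, 5.5, 6.5, 7.5, 8.5, 9.5, 2.5])
X_b = np.column_stack([xs, np.zeros_like(xs)])
y_b = np.array([0] * 6 + [1] * 6)
print(danger_indices(X_b, y_b, m=3))
```

Only the minority point sitting next to the majority block (index 6) is flagged. The stray minority point surrounded entirely by majority examples is treated as noise, and the points deep inside the minority block are treated as safe.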

We can implement Borderline-SMOTE1 using the BorderlineSMOTE class from imbalanced-learn.

We can demonstrate the technique on the synthetic binary classification problem used in the previous sections.

Instead of generating new synthetic examples for the minority class blindly, we would expect the Borderline-SMOTE method to only create synthetic examples along the decision boundary between the two classes.

The complete example of using Borderline-SMOTE to oversample binary classification datasets is listed below.

```python
# borderline-SMOTE for imbalanced dataset
from collections import Counter
from sklearn.datasets import make_classification
from imblearn.over_sampling import BorderlineSMOTE
from matplotlib import pyplot
from numpy import where
# define dataset
X, y = make_classification(n_samples=10000, n_features=2, n_redundant=0,
    n_clusters_per_class=1, weights=[0.99], flip_y=0, random_state=1)
# summarize class distribution
counter = Counter(y)
print(counter)
# transform the dataset
oversample = BorderlineSMOTE()
X, y = oversample.fit_resample(X, y)
# summarize the new class distribution
counter = Counter(y)
print(counter)
# scatter plot of examples by class label
for label, _ in counter.items():
    row_ix = where(y == label)[0]
    pyplot.scatter(X[row_ix, 0], X[row_ix, 1], label=str(label))
pyplot.legend()
pyplot.show()
```

Running the example first creates the dataset and summarizes the initial class distribution, showing a 1:100 relationship.

The Borderline-SMOTE is applied to balance the class distribution, which is confirmed with the printed class summary.

```
Counter({0: 9900, 1: 100})
Counter({0: 9900, 1: 9900})
```

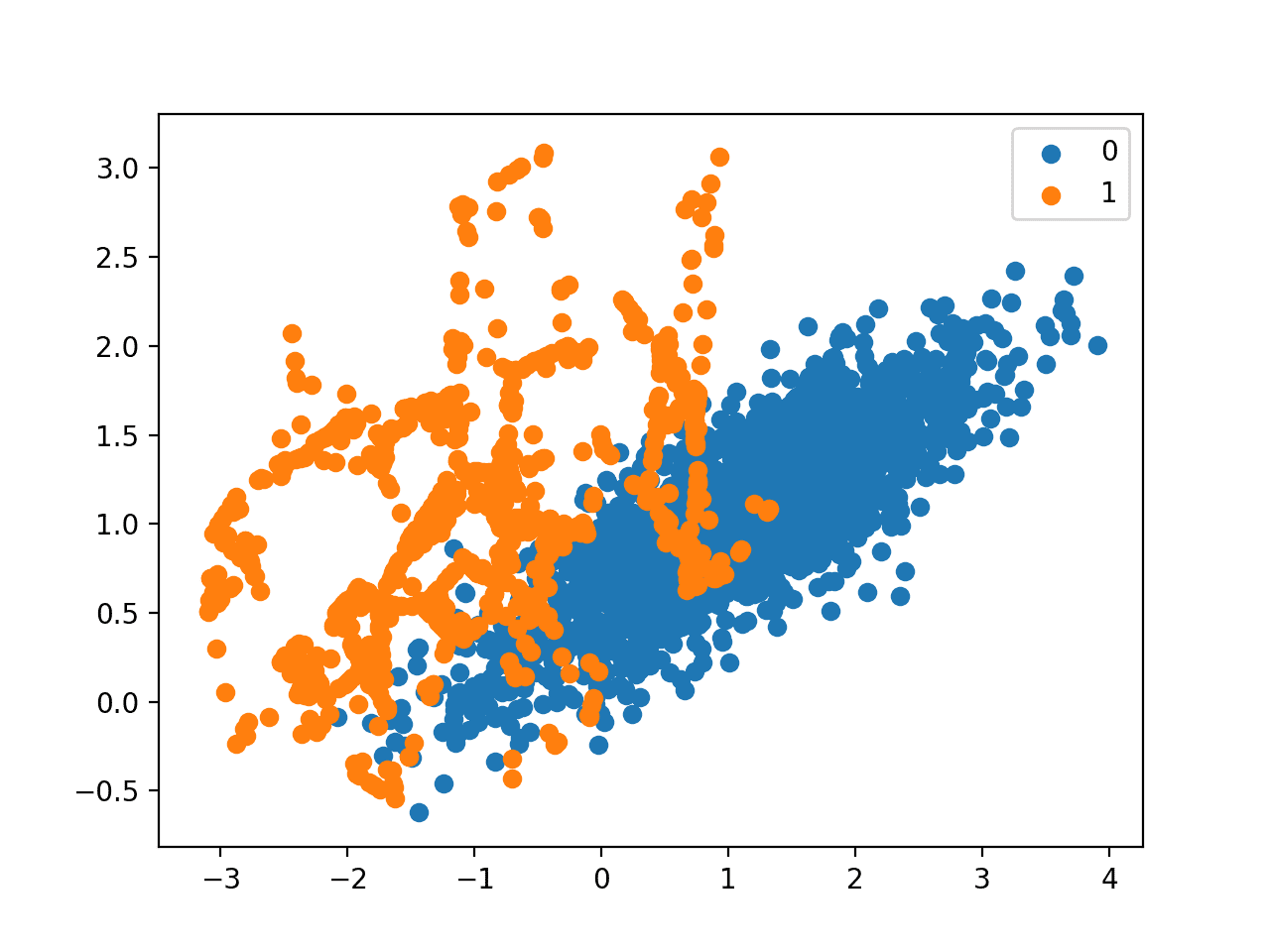

Finally, a scatter plot of the transformed dataset is created. The plot clearly shows the effect of the selective approach to oversampling. Examples along the decision boundary of the minority course are oversampled intently (orangish).

The plot shows that those examples far from the determination purlieus are not oversampled. This includes both examples that are easier to classify (those orangish points toward the top left of the plot) and those that are overwhelmingly hard to allocate given the strong class overlap (those orange points toward the bottom right of the plot).

Besprinkle Plot of Imbalanced Dataset With Borderline-SMOTE Oversampling

Deadline-SMOTE SVM

Hien Nguyen, et al. advise using an alternative of Borderline-SMOTE where an SVM algorithm is used instead of a KNN to identify misclassified examples on the decision purlieus.

Their approach is summarized in the 2009 paper titled "Deadline Over-sampling For Imbalanced Data Classification." An SVM is used to locate the decision boundary divers past the support vectors and examples in the minority class that close to the support vectors become the focus for generating synthetic examples.

… the borderline area is approximated by the support vectors obtained after training a standard SVMs classifier on the original training set. New instances will be randomly created along the lines joining each minority class support vector with a number of its nearest neighbors using the interpolation

— Borderline Over-sampling For Imbalanced Data Classification, 2009.

In addition to using an SVM, the technique attempts to select regions where there are fewer examples of the minority class and tries to extrapolate towards the class boundary.

If majority class instances count for less than a half of its nearest neighbors, new instances will be created with extrapolation to expand minority class area toward the majority class.

— Borderline Over-sampling For Imbalanced Data Classification, 2009.
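The difference between interpolation and extrapolation can be sketched in a few lines. The example below assumes the update rules as I understand them from the paper: a synthetic point is placed between a minority support vector and one of its minority neighbors (interpolation), or on the far side of the support vector, away from that neighbor (extrapolation), depending on the fraction of majority examples among the support vector's neighbors. The function name, arguments, and example points are hypothetical, and this is not the code used inside the library.

```python
import numpy as np

def synthesize_from_support_vector(support_vector, neighbor, majority_fraction, rng):
    """Hypothetical helper: create one synthetic point from a minority-class
    support vector and one of its minority-class nearest neighbors."""
    step = rng.uniform(0.0, 1.0)
    if majority_fraction < 0.5:
        # few majority neighbors: extrapolate past the support vector,
        # moving away from the neighbor to expand the minority region
        return support_vector + step * (support_vector - neighbor)
    # many majority neighbors: interpolate between the support vector
    # and its neighbor, staying inside the existing minority region
    return support_vector + step * (neighbor - support_vector)

rng = np.random.default_rng(1)
support_vector = np.array([0.0, 0.0])
neighbor = np.array([1.0, 0.0])

# majority examples are 20 percent of the neighbors: extrapolation
extrapolated = synthesize_from_support_vector(support_vector, neighbor, 0.2, rng)
# majority examples are 80 percent of the neighbors: interpolation
interpolated = synthesize_from_support_vector(support_vector, neighbor, 0.8, rng)
print(extrapolated, interpolated)
```

In this toy setup, the extrapolated point has a first coordinate between -1 and 0, and the interpolated point has a first coordinate between 0 and 1.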

This variation can be implemented via the SVMSMOTE class from the imbalanced-learn library.

The example below demonstrates this alternative approach to Borderline-SMOTE on the same imbalanced dataset.

```python
# borderline-SMOTE with SVM for imbalanced dataset
from collections import Counter
from sklearn.datasets import make_classification
from imblearn.over_sampling import SVMSMOTE
from matplotlib import pyplot
from numpy import where
# define dataset
X, y = make_classification(n_samples=10000, n_features=2, n_redundant=0,
    n_clusters_per_class=1, weights=[0.99], flip_y=0, random_state=1)
# summarize class distribution
counter = Counter(y)
print(counter)
# transform the dataset
oversample = SVMSMOTE()
X, y = oversample.fit_resample(X, y)
# summarize the new class distribution
counter = Counter(y)
print(counter)
# scatter plot of examples by class label
for label, _ in counter.items():
    row_ix = where(y == label)[0]
    pyplot.scatter(X[row_ix, 0], X[row_ix, 1], label=str(label))
pyplot.legend()
pyplot.show()
```

Running the example first summarizes the raw class distribution, then the balanced class distribution after applying Borderline-SMOTE with an SVM model.

```
Counter({0: 9900, 1: 100})
Counter({0: 9900, 1: 9900})
```

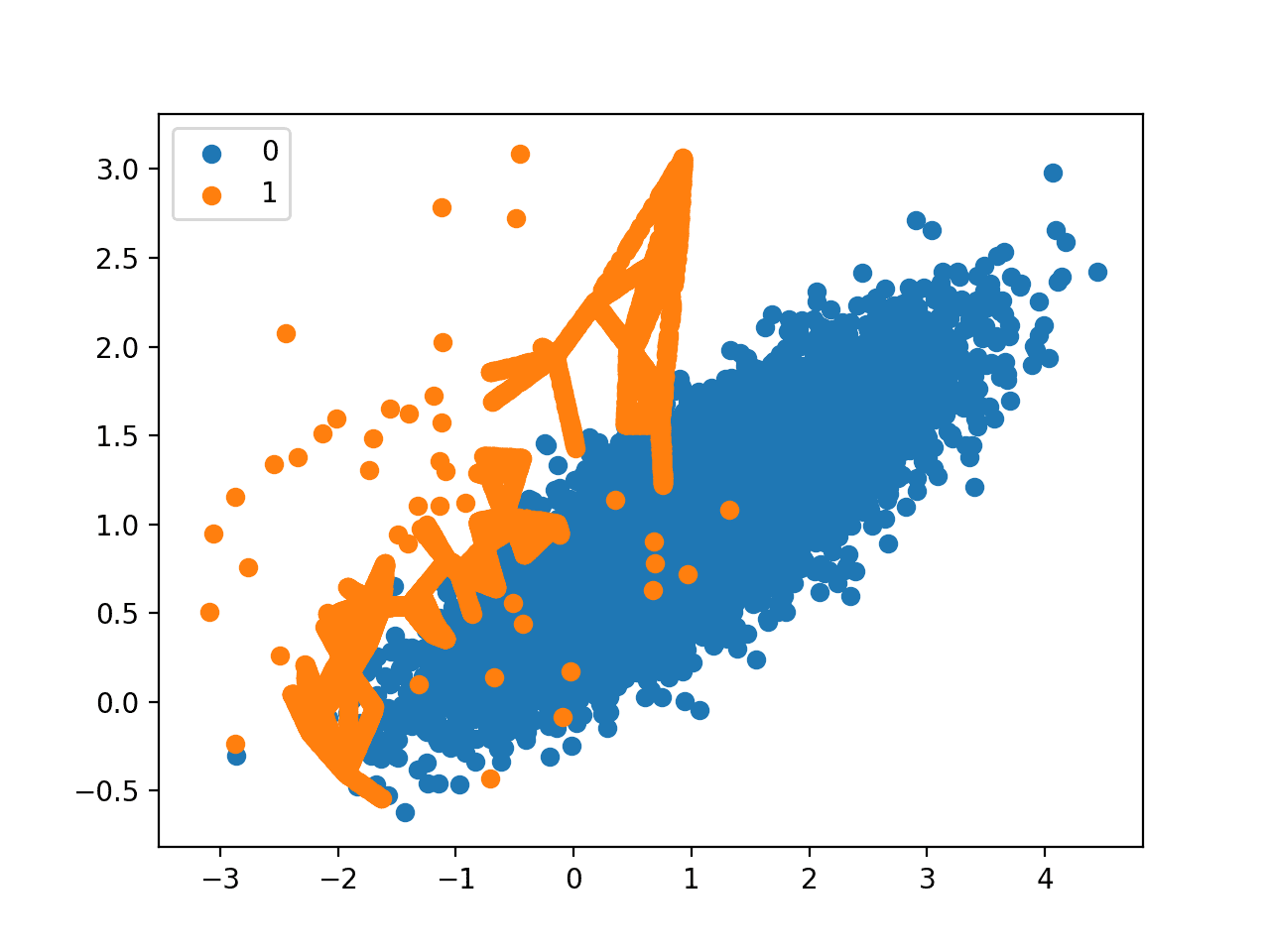

A scatter plot of the dataset is created showing the directed oversampling along the decision boundary with the majority class.

We can also see that, unlike Borderline-SMOTE, more examples are synthesized away from the region of class overlap, such as toward the top left of the plot.

Scatter Plot of Imbalanced Dataset With Borderline-SMOTE Oversampling With SVM

Adaptive Synthetic Sampling (ADASYN)

Another approach involves generating synthetic samples inversely proportional to the density of the examples in the minority class.

That is, generate more synthetic examples in regions of the feature space where the density of minority examples is low, and fewer or none where the density is high.

This modification to SMOTE is referred to as the Adaptive Synthetic Sampling Method, or ADASYN, and was proposed by Haibo He, et al. in their 2008 paper named for the method, titled "ADASYN: Adaptive Synthetic Sampling Approach For Imbalanced Learning."

ADASYN is based on the idea of adaptively generating minority data samples according to their distributions: more synthetic data is generated for minority class samples that are harder to learn compared to those minority samples that are easier to learn.

— ADASYN: Adaptive synthetic sampling approach for imbalanced learning, 2008.

With ADASYN, unlike Borderline-SMOTE, a discriminative model is not created. Instead, examples in the minority class are weighted according to their density, then those examples with the lowest density are the focus for the SMOTE synthetic example generation process.

The key idea of ADASYN algorithm is to use a density distribution as a criterion to automatically decide the number of synthetic samples that need to be generated for each minority data example.

— ADASYN: Adaptive synthetic sampling approach for imbalanced learning, 2008.
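The weighting step can be illustrated with a short sketch. Following the description in the ADASYN paper, each minority example receives a ratio equal to the fraction of majority examples among its k nearest neighbors, the ratios are normalized to sum to one, and each example is assigned a share of the total number of synthetic samples in proportion to its normalized ratio. The function name `adasyn_allocation`, the `beta` argument, and the toy points are assumptions made for this illustration; the library's implementation differs in its details.

```python
import numpy as np

def adasyn_allocation(X, y, minority_label=1, k=5, beta=1.0):
    """Hypothetical helper: return the normalized weight and the number of
    synthetic samples allocated to each minority example."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y)
    minority_idx = np.flatnonzero(y == minority_label)
    n_minority = minority_idx.size
    n_majority = y.size - n_minority
    # total number of synthetic examples to create (beta=1.0 balances the classes)
    total = (n_majority - n_minority) * beta
    ratios = np.empty(n_minority)
    for pos, i in enumerate(minority_idx):
        dist = np.linalg.norm(X - X[i], axis=1)
        dist[i] = np.inf  # exclude the example itself
        neighbors = np.argsort(dist)[:k]
        # fraction of majority examples among the k nearest neighbors
        ratios[pos] = np.mean(y[neighbors] != minority_label)
    if ratios.sum() == 0:
        # no minority example has a majority neighbor: nothing to allocate
        return np.zeros(n_minority), np.zeros(n_minority, dtype=int)
    weights = ratios / ratios.sum()
    counts = np.rint(weights * total).astype(int)
    return weights, counts

# toy data (made up for illustration): ten majority points and seven minority points
majority = np.array([[0, 0], [0, 1], [1, 0], [1, 1], [0.5, 0.5],
                     [2, 0], [2, 1], [2, 0.5], [4.5, 5], [5, 4.5]])
minority = np.array([[10, 10], [10, 11], [11, 10], [11, 11],  # isolated minority cluster
                     [5, 5], [5.5, 5.5],                      # next to majority points
                     [0.5, 0.4]])                             # deep inside the majority
X_demo = np.vstack([majority, minority])
y_demo = np.array([0] * len(majority) + [1] * len(minority))

weights, counts = adasyn_allocation(X_demo, y_demo, k=3)
print(weights)
print(counts)
```

Here the minority example that sits deep inside the majority class receives the largest weight, while the isolated minority cluster receives none. Because the per-example counts are rounded, the total number of synthetic examples can differ slightly from the number needed for an exact balance.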

We can implement this procedure using the ADASYN class in the imbalanced-learn library.

The example below demonstrates this alternative approach to oversampling on the imbalanced binary classification dataset.

```python
# Oversample and plot imbalanced dataset with ADASYN
from collections import Counter
from sklearn.datasets import make_classification
from imblearn.over_sampling import ADASYN
from matplotlib import pyplot
from numpy import where
# define dataset
X, y = make_classification(n_samples=10000, n_features=2, n_redundant=0,
    n_clusters_per_class=1, weights=[0.99], flip_y=0, random_state=1)
# summarize class distribution
counter = Counter(y)
print(counter)
# transform the dataset
oversample = ADASYN()
X, y = oversample.fit_resample(X, y)
# summarize the new class distribution
counter = Counter(y)
print(counter)
# scatter plot of examples by class label
for label, _ in counter.items():
    row_ix = where(y == label)[0]
    pyplot.scatter(X[row_ix, 0], X[row_ix, 1], label=str(label))
pyplot.legend()
pyplot.show()
```

Running the example first creates the dataset and summarizes the initial class distribution, then the updated class distribution after oversampling was performed.

```
Counter({0: 9900, 1: 100})
Counter({0: 9900, 1: 9899})
```

A scatter plot of the transformed dataset is created. Like Borderline-SMOTE, we can see that synthetic sample generation is focused around the decision boundary as this region has the lowest density.

Unlike Borderline-SMOTE, we can see that the examples that have the most class overlap have the most focus. On problems where these low density examples might be outliers, the ADASYN approach may put too much attention on these areas of the feature space, which may result in worse model performance.

It may help to remove outliers prior to applying the oversampling procedure, and this might be a helpful heuristic to use more generally.

Scatter Plot of Imbalanced Dataset With Adaptive Synthetic Sampling (ADASYN)

Further Reading

This section provides more resources on the topic if you are looking to go deeper.

Books

- Learning from Imbalanced Data Sets, 2018.

- Imbalanced Learning: Foundations, Algorithms, and Applications, 2013.

Papers

- SMOTE: Synthetic Minority Over-sampling Technique, 2002.

- Borderline-SMOTE: A New Over-Sampling Method in Imbalanced Data Sets Learning, 2005.

- Borderline Over-sampling For Imbalanced Data Classification, 2009.

- ADASYN: Adaptive Synthetic Sampling Approach For Imbalanced Learning, 2008.

API

- imblearn.over_sampling.SMOTE API.

- imblearn.over_sampling.SMOTENC API.

- imblearn.over_sampling.BorderlineSMOTE API.

- imblearn.over_sampling.SVMSMOTE API.

- imblearn.over_sampling.ADASYN API.

Articles

- Oversampling and undersampling in data analysis, Wikipedia.

Summary

In this tutorial, you discovered the SMOTE for oversampling imbalanced classification datasets.

Specifically, you learned:

- How the SMOTE synthesizes new examples for the minority class.

- How to correctly fit and evaluate machine learning models on SMOTE-transformed training datasets.

- How to use extensions of the SMOTE that generate synthetic examples along the class decision boundary.

Do you have any questions?

Ask your questions in the comments below and I will do my best to answer.

Source: https://machinelearningmastery.com/smote-oversampling-for-imbalanced-classification/
